Ruikun Luo

Ph.D. student in Robotics, University of Michigan, Ann Arbor

Email, Google Scholar, GitHub, LinkedIn

I am a sixth-year Ph.D. student in the Robotics Institute at the University of Michigan, working with Professor Xi Jessie Yang at the Interaction & Collaboration Research Lab (ICRL).

I received a B.E. in Mechanical Engineering and Automation from Tsinghua University in 2012 and an M.S. in Mechanical Engineering from Carnegie Mellon University in 2014, where I worked with Professor Katia Sycara and Professor Nilanjan Chakraborty. Before joining ICRL, I worked with Professor Dmitry Berenson at the ARM Lab.

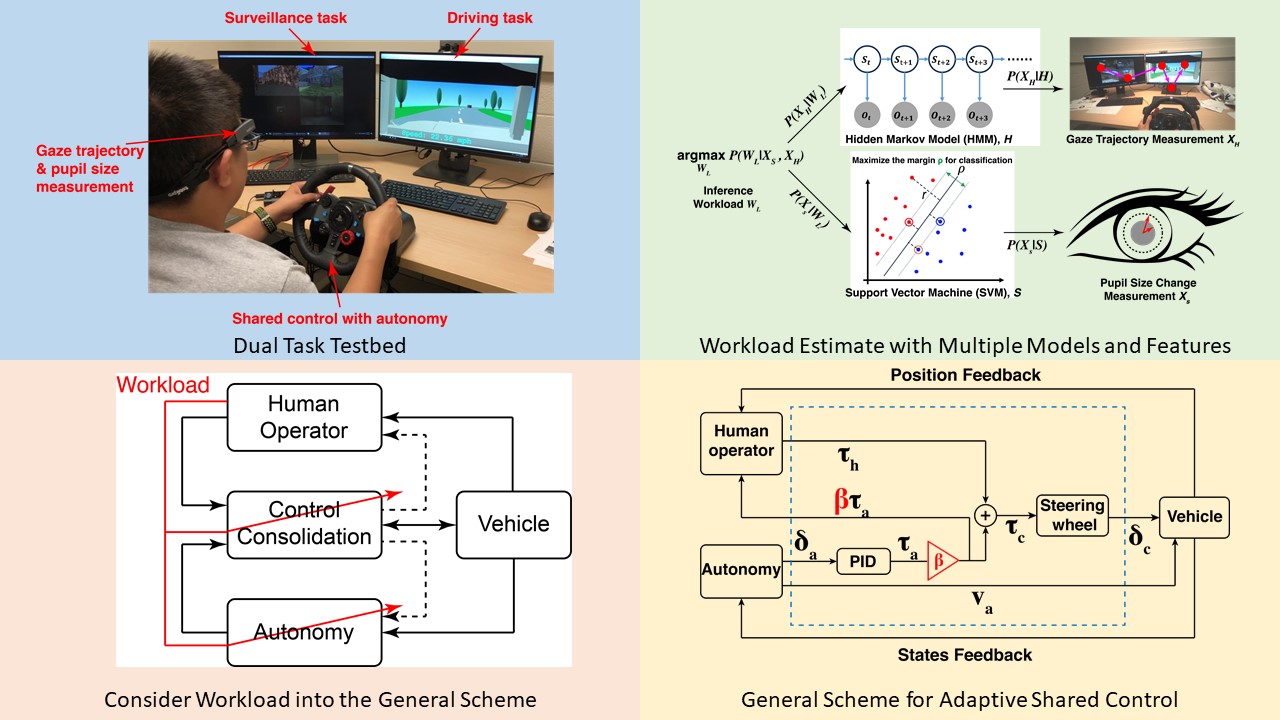

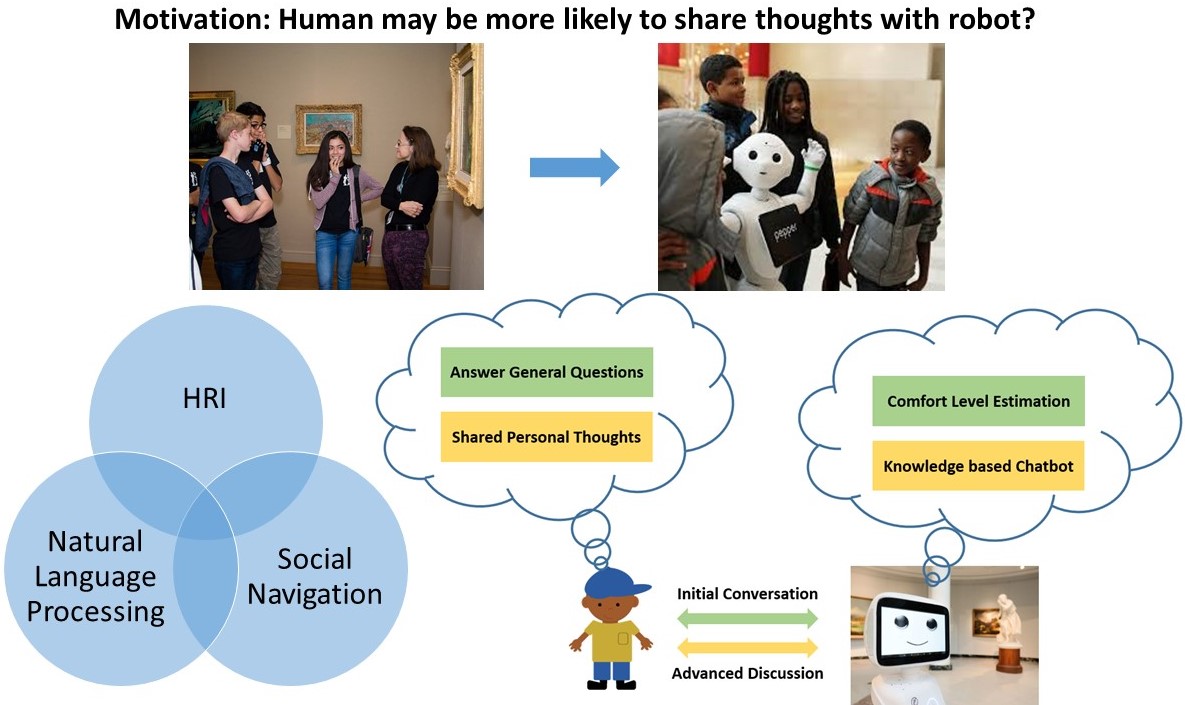

My research lies at the intersection of machine learning, robotics, and human factors, and currently focuses on human-robot and human-AI interaction and collaboration. My goal is to study how machine learning can be used to understand humans, how a robot can make decisions based on the inferred behavior of humans, and how to help humans understand robots and AI.